Connecting with Microsoft AI and NVIDIA in Berlin

When Microsoft announced that it would be in the neighborhood with one of our favorite topics, we knew we had to attend the Microsoft AI Tour stop in Berlin. With an agenda full of fascinating topics, it was hard for our technology and AI team to choose which sessions to attend.

The tenor of the event was one of reliability and maturity. Microsoft and its event partner NVIDIA wanted to let everyone know that artificial intelligence is long past the experimental stage of proofs of concept and haphazardly assembled tech demos. AI has reached a point where scalability and the establishment of best practices and operations are now more critical than sheer feasibility.

In this post, we break down four key areas and what we observed and learned in each.

Strong commitments to security and trust

“How many of you trust AI?” the speaker began, and the answer became evident upon counting the hands in the audience: not many. Most AI users don’t really know what is happening under the hood, and the output is difficult to predict. Even the researchers and scientists behind the technology are constantly discovering behaviors and peculiarities in their models that have not been seen before.

As we all know, LLMs are usually trained on data from across the internet, which prompts the obvious questions: What do they know about me, and will they use what I said when talking to other users?

At this point, we all have many questions about our privacy when using AI. Microsoft therefore pulled back the curtain on its approach to securing AI and shared what should be taken into account when building an AI-powered application.

At their core, LLMs and foundation models that have not been fine-tuned are difficult to trust: they hallucinate unpredictably and have few guardrails to rein in their output.

However, by adding layers of safety on top of the foundation models, such as grounding them in high-quality knowledge bases, self-evaluation patterns, and prompt instructions, developers can define safe corridors for reliable and consistent responses.
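To make these layers a little more concrete, here is a minimal sketch in Python. It is not Microsoft's implementation: the names `build_grounded_prompt`, `cites_known_source`, and `answer_with_guardrails` are our own hypothetical helpers, the model call is passed in as a plain function, and the citation check is only a crude stand-in for a real self-evaluation step.

```python
import re
from typing import Callable, List

# Hypothetical fixed instructions; real systems tune these carefully.
SYSTEM_RULES = (
    "Answer only from the numbered sources below and cite them like [1]. "
    "If the sources do not contain the answer, say that you do not know."
)
FALLBACK = "Sorry, I cannot answer that reliably."


def build_grounded_prompt(question: str, sources: List[str]) -> str:
    """Combine fixed instructions, retrieved sources, and the user question."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(sources, start=1))
    return f"{SYSTEM_RULES}\n\nSources:\n{numbered}\n\nQuestion: {question}"


def cites_known_source(answer: str, sources: List[str]) -> bool:
    """Crude stand-in for self-evaluation: every citation must point to a real source."""
    cited = [int(n) for n in re.findall(r"\[(\d+)\]", answer)]
    return bool(cited) and all(1 <= n <= len(sources) for n in cited)


def answer_with_guardrails(
    question: str,
    sources: List[str],
    generate: Callable[[str], str],
    evaluate: Callable[[str, List[str]], bool] = cites_known_source,
) -> str:
    """Return the model's draft only if it is grounded and passes evaluation."""
    if not sources:  # nothing to ground on, so do not let the model guess
        return FALLBACK
    draft = generate(build_grounded_prompt(question, sources))
    return draft if evaluate(draft, sources) else FALLBACK
```

In this sketch, `generate` would wrap whatever model endpoint you use. The point is only that the instructions, the grounding data, and the check on the output are separate layers that can each be tightened independently.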

Safety is a critical component that stretches across the entire breadth of generative AI: from the data ingested, to the instructions applied while processing input and output, all the way to disclaimers and labels in the user interface.

So our question should be reframed: Do I trust the developers behind the specific AI tool?

Apart from discussing general concepts and best practices, Microsoft introduced its new Security Copilot, which will be available from May 1st and can function as a SOC analyst. It can answer questions about the state of your Microsoft apps connected to the cloud, conduct security audits, and assist in debugging various application errors. It can even alert you if an email you receive contains an attempted phishing attack.

Copilots, not autopilots

The vibrantly enthusiastic Seth Juarez summed it up perfectly: the current suite of AI that Microsoft provides is not meant to run all on its own. You need to help it refine the desired output, guide it, and tell it what worked and what may not be correct.

Multiple breakout and workshop sessions on how to build your own copilot were completely overrun throughout the day, with people being turned away from overflowing rooms, so it was obvious which topic was on everyone’s mind.

The examples ranged from copilots that monitor your systems and logs to report on potential vulnerabilities and breaches to copilots that summarize and analyze business data and create reports with suggestions. The possibilities are endless, and nearly every Microsoft product is now equipped with its own copilot feature.

But what is also clear is that this plethora of different entry points into conversational interfaces seems convoluted and raw. UX patterns and output formats vary greatly, and the question arises: wouldn’t it be a lot more accessible and friendly to have a single interface with the AI rather than having to jump between them constantly?

A better solution will likely be OS-integrated LLMs that provide standardized patterns or modules that applications can tap into, but we are not quite there yet. Until then, every service and product will likely have its own standalone conversational interface before they are consolidated in a swift move at the OS level.

RAG and AI search

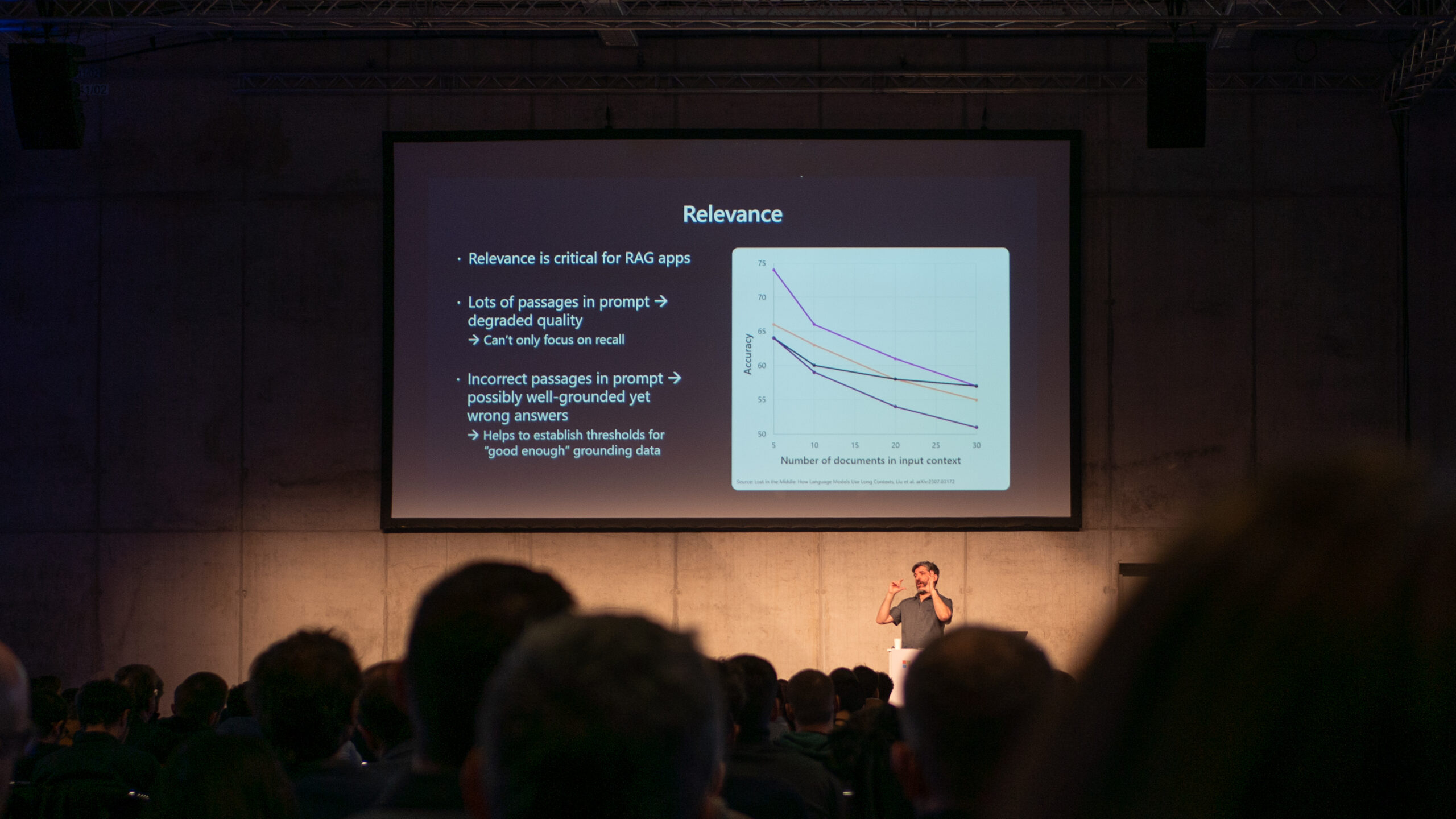

Vector search and retrieval-augmented generation (RAG) still have generative AI firmly in their grasp. Grounding with vector databases is essential for reliable output and a significant reduction in hallucinations.

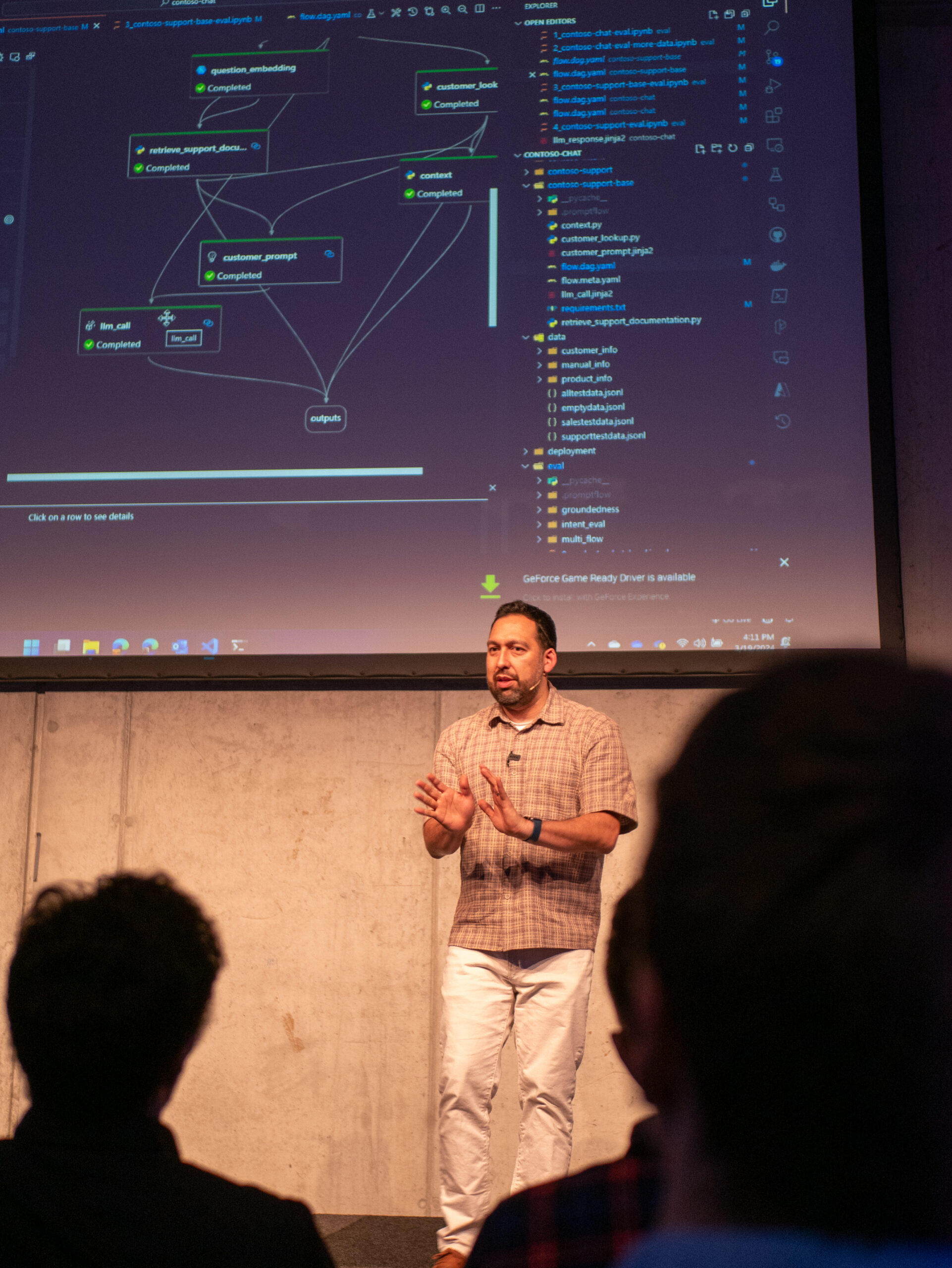

Both Microsoft and NVIDIA presented models and best practices for workflows that preprocess and reference vector databases, as well as demonstrations of Azure AI Studio.

While it seems as though technological advancements have slowed a bit in this field recently, there were some takeaways for us that illustrated the necessity for indexing when handling large amounts of documents for the embedding model.
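For readers new to the topic, the retrieval step can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, the index is a plain in-memory list, and none of it reflects the Azure or NVIDIA tooling shown at the event. It does show why indexing matters: documents are embedded once up front, and each query is then only compared against the stored vectors.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents: List[str]) -> List[Tuple[str, Counter]]:
    """Embed every document once so queries do not repeat that work."""
    return [(doc, embed(doc)) for doc in documents]


def search(index: List[Tuple[str, Counter]], query: str, top_k: int = 2) -> List[str]:
    """Return the top_k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]
```

The documents returned by `search` are what would be passed to the model as grounding context. A production setup would swap the list scan for an approximate nearest-neighbor index, which is where the handling of large document collections becomes the real challenge.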

Establishing LLMOps for production-ready applications

What fell short in my eyes was the sharing of best practices on how to enable less tech-savvy people in organizations, for example by providing building blocks for prompts or pipelines that assemble those building blocks.

One of the biggest challenges of establishing acceptance of LLMs and AI within organizations is the fact that, while we are communicating on an intuitive level, natural language interfaces are not something we are accustomed to. Learning how to phrase prompts properly, with their opaque intricacies of what to include and how, should be managed and supported by tooling that steers users accordingly.

A substantial part of building the right infrastructure should be the automated inclusion, or at least provision, of frequently used excerpts and prompt modules that get attached to prompts. If I am generating content for my marketing materials, I should not constantly have to paste instructions for the tone of voice of the output or camera settings for consistent image generation.
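As a rough idea of what such tooling could look like, here is a minimal Python sketch. Everything in it is hypothetical: the module texts, the task names, and the `compose_prompt` helper are our own illustration, not something presented at the event.

```python
from typing import Dict, List

# Hypothetical reusable prompt modules, maintained once by the team.
PROMPT_MODULES: Dict[str, str] = {
    "tone_of_voice": "Write in a friendly, concise tone and avoid jargon.",
    "camera_settings": "Render the image as if shot with a 35mm lens in soft daylight.",
}

# Hypothetical mapping from task type to the modules attached automatically.
TASK_MODULES: Dict[str, List[str]] = {
    "marketing_copy": ["tone_of_voice"],
    "marketing_image": ["camera_settings"],
}


def compose_prompt(task: str, user_request: str) -> str:
    """Prepend the modules registered for a task to the user's own request."""
    parts = [PROMPT_MODULES[name] for name in TASK_MODULES.get(task, [])]
    parts.append(user_request.strip())
    return "\n\n".join(parts)
```

With a registry like this, a call such as `compose_prompt("marketing_copy", "Announce our new product.")` attaches the tone-of-voice instructions without the user ever having to paste them, and an unknown task simply passes the request through unchanged.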

While Microsoft covered potential workflows for rewriting user queries before generating the final output, many of the struggles and challenges of enterprise-level ops management were unfortunately not mentioned.

In conclusion

Overall, we greatly enjoyed the event, meeting fascinating people (some adorned with the obligatory Apple Vision Pro) and listening to what drives and motivates others in the realm of artificial intelligence these days.

Thank you, Microsoft, for your efforts in outreach to developers and advocates!